The debut of OpenAI’s language processing tool, ChatGPT, made waves in the artificial intelligence field and in the tech industry as a whole. The technology is widely considered the latest game changer. More and more companies have started using ChatGPT (or similar AI language processing solutions) to save time and costs, either by implementing the tool in their products or by using it in day-to-day processes. Such a significant change in the field has prompted us, as attorneys, to take a close look at the use of ChatGPT and the risks it entails.

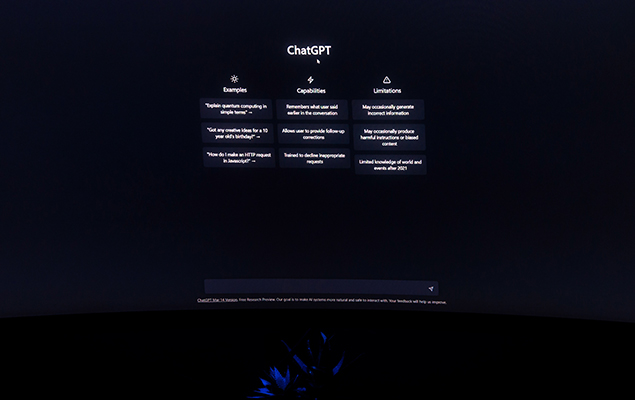

But first, what is ChatGPT? ChatGPT is a tool capable of understanding natural language. It provides its users with human-like responses to almost anything they might ask, and can create materials on demand, such as text and other creative content. However simple this might sound, the use of ChatGPT entails a number of legal and practical risks that companies should evaluate before allowing its use within their organization or software.

Infringement of Rights

Although ChatGPT can probably give you an answer to almost anything you ask, outputs generated by AI models can be generic and non-distinctive. In other words, there is no guarantee that the output you receive, and then use in your product, will not also be provided to someone else. OpenAI’s terms of use specifically address this issue and state that ownership of the outputs belongs to the user, unless another user receives a similar output. Therefore, ownership of any output received from ChatGPT may be called into question, and there is no certainty that the user holds sole ownership. Such questions of ownership and copyright infringement can make it genuinely difficult to protect proprietary rights in these outputs, or in any further work based on them.

In addition, there are a number of questions regarding the data used to train ChatGPT, on which its outputs are based. ChatGPT is trained on a vast amount of information and data, including books, articles, and other written materials. This training data is likely to include copyrighted works of third parties.

Accessing and using data, information, and other materials available on the internet without an appropriate license or permission may constitute copyright infringement. As a result, any derivative works, updates, or improvements created from ChatGPT outputs that are based on such materials may also infringe the rights of third parties. This means that users of ChatGPT risk facing legal action from third parties alleging that their rights have been violated.

This is not merely a theoretical risk. There are currently a number of pending cases in the US and the UK against operators of generative AI tools, such as OpenAI and Microsoft (GitHub), alleging copyright infringement.

For instance, in a class action in the US, the plaintiffs claim that software code generated by an AI tool, based on (or even copied verbatim from) open-source code available online, infringed the rights of the authors of that open-source code, because the output did not reference the terms of the applicable open-source license.

In a case pending in the UK, a photography agency filed a copyright infringement claim against the operator of an AI image-generation tool. The agency claims that the tool copied millions of pictures owned by the agency and used them to train its AI. In this case, some of the generated outputs even contained the watermark that appears on all of the agency’s pictures, which indicates that those pictures were used: the AI tool could not have reproduced this specific watermark without learning it from the agency’s pictures.

Nevertheless, an opinion published by the Israeli Ministry of Justice regarding the use of copyrighted materials for machine learning posits that training AI models on copyrighted materials is generally legal, subject to certain limitations. The opinion also states that Israeli law contains no specific exclusions for materials produced by AI tools, and that any infringement claims in that regard will be examined under existing law, in the same way as other copyright infringement claims. However, we note that this is only an opinion, which has yet to be tested in court. Moreover, the opinion addresses only the training process of the AI model, and not the outputs generated by that model.

Accuracy

Because ChatGPT’s outputs are based on the training data and information available to it, they are inherently subject to inaccuracies. ChatGPT can generate outputs based only on the information it has at a given time, without a deep understanding of the subject matter.

If the training data contains mistakes or biases, these will be reflected in the outputs. In addition, if the training data on the requested subject is limited, the output may be biased or misleading. Likewise, if facts change after the training data was collected, those changes will not be reflected in the outputs. (As of May 2023, ChatGPT itself states that its training data extends only to information available as of September 2021.) A ChatGPT user has no ability to control the data on which the requested output is based, to select a particular dataset, or to choose a particular algorithm. Therefore, any output generated by ChatGPT should be reviewed carefully rather than relied on blindly.

Data Security/Privacy

Because ChatGPT relies on a vast amount of data and information available on the internet, some of that data is likely to include personal data of individuals. Therefore, the use and processing of ChatGPT’s outputs may violate various data protection laws. We note that many of these laws have extraterritorial application and must be complied with. Notably, OpenAI’s terms of use place the responsibility for compliance with these laws and regulations on the user: under those terms, users must confirm that they process personal data in accordance with applicable law. Additionally, OpenAI’s Data Processing Addendum (DPA) does not automatically apply to all users; each user must explicitly request to be bound by the DPA for it to apply to them.

The potential breach of data security requirements can discourage suppliers or customers from doing business with companies whose product is based on a generative AI tool, since use of the product may expose these suppliers’ or customers’ information to ChatGPT without restriction. Such disclosure may leave the product owner in ongoing breach of the data security requirements imposed by third parties, and consequently unable to enter into contracts with suppliers or customers.

Liability

As previously mentioned, any information or data uploaded to ChatGPT, and any output generated by ChatGPT, is owned by the user, as long as the user complies with OpenAI’s terms of use.

Although this might sound favorable, it actually means that OpenAI assumes no liability for any output, which is provided “as is.” The sole liability for such outputs lies with the user, including toward OpenAI itself. As a side note, users’ liability with respect to their use of ChatGPT is not limited: if OpenAI has a claim against a user, the amount it can demand is unlimited.

In addition, if you think that recovering from OpenAI could solve all of the above, you should know that OpenAI’s liability toward its users is limited to the greater of USD 100 or the aggregate amount the user paid for the service during the 12 months preceding the claim against OpenAI. Therefore, treating ChatGPT’s outputs with caution may be the best course of action.

ChatGPT’s Legal-Commercial Aspects

The use of ChatGPT involves uncertainties and can raise multiple legal and practical questions. Using outputs generated by ChatGPT as part of your product could even raise red flags during a due diligence inquiry held as part of an equity investment or an M&A transaction.

The use of ChatGPT should be subject to a case-by-case analysis, especially before engaging in any commercial use of the generated output. It is crucial to evaluate the relevant risks and take measures to minimize them as much as possible and protect intellectual property rights, whether before initiating an investment transaction or implementing an AI-based tool as part of the company’s regular operations.

While ChatGPT’s usefulness is undeniable, and technological progress cannot be stopped, companies should still exercise caution when using ChatGPT in their core product development. Although ChatGPT can be an excellent tool for daily work procedures and office tasks, incorporating it into core product development can lead to unnecessary complications, particularly in the absence of stable regulation. We therefore recommend that companies carefully assess the potential risks and benefits before deciding to use ChatGPT in this context.

***

Barnea Jaffa Lande’s legal team is happy to answer questions regarding developing, using and implementing AI language processing solutions.

Adv. Inbar Katzir is an associate in the firm’s Corporate Department.